Pattern Recognition Group

Welcome to the homepage of the "Pattern Recognition" group at TU Dortmund University! Our research focuses on the development and application of advanced pattern recognition algorithms and systems in a variety of domains, including document analysis, human activity recognition, remote sensing, natural language processing, and uncertainty quantification of neural networks. Our goal is to advance the state of the art in these fields and to create innovative solutions that can have a real impact on society.

We are a highly interdisciplinary group, comprising researchers from a range of backgrounds, including computer science, electrical engineering, and mathematics. This diversity of expertise allows us to approach problems from several angles and to find creative solutions that researchers working within a single discipline might overlook.

If you are interested in our work, we encourage you to browse through our research papers, learn more about our current projects, and get in touch with us to discuss potential collaborations. We are always looking for new ways to collaborate and make a positive impact in the world, and we welcome inquiries from researchers and practitioners alike. Thank you for visiting our homepage, and we hope to hear from you soon!

Project group "HistWeb" submits final report

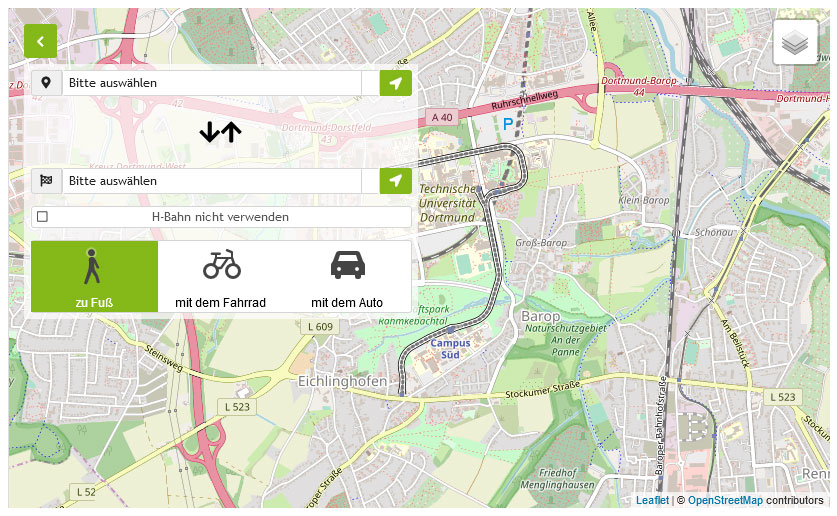

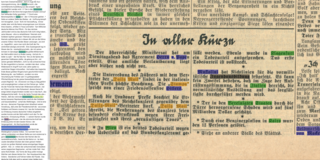

Students from the project group "HistWeb" successfully completed their work on an interactive website for analyzing and managing historical newspapers.

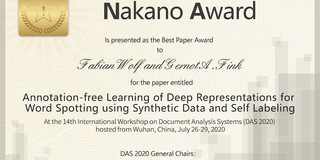

Pattern Recognition Group Wins Nakano Award

This year, the Nakano Award, honouring the best paper, was awarded to Fabian Wolf and Gernot A. Fink.

Unleashing Big Data of the Past – Europe builds a Time Machine

The European Commission has chosen Time Machine as one of the six proposals retained for preparing large-scale research initiatives.

Pattern Recognition Group Wins Best Student Paper Award at ICFHR 2018

This year, the IAPR Best Student Paper Award goes to Eugen Rusakov, Leonard Rothacker, Hyunho Mo, and Gernot A. Fink.

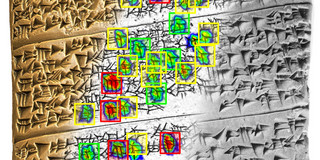

German Research Foundation (DFG) Funds Collaborative Project for the Analysis of Cuneiform Tablets

In the context of the project "Computer-assisted Cuneiform Analysis" (CuKa), the German Research Foundation (DFG) funds a collaboration between the…