Location & approach

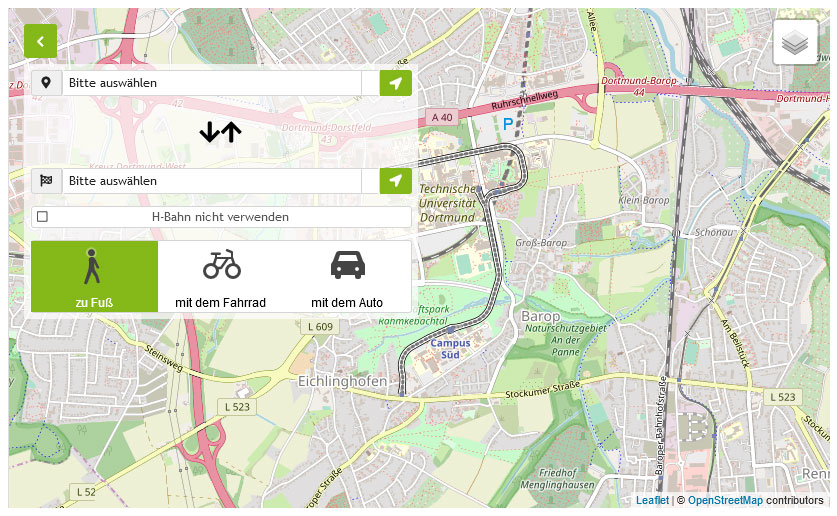

Building OH16 is located on the North Campus of TU Dortmund University, close to the Dortmund West motorway interchange, where the Sauerlandlinie A 45 (Frankfurt-Dortmund) crosses the Ruhrschnellweg B 1 / A 40. To the west, this connects the university to the motorway network of the Ruhr area and, beyond it, to the Lower Rhine region and the Netherlands. To the east, the B 1 leads through Dortmund to Unna, where it connects to the motorways towards Bremen, Hannover/Berlin and Kassel. To the north, the A 45 ends after a few kilometres at the A 2, which leads east to the Kamener Kreuz and west to Oberhausen. South of the university, at the Westhofener Kreuz, the A 45 crosses the A 1, which leads to Bremen and Köln. The most convenient exits are "Dortmund-Eichlinghofen" on the A 45 and "Dortmund-Dorstfeld" or "Dortmund-Barop" on the B 1 / A 40. Signs at all of these exits direct you to the university.

By public transport, building OH16 can be reached via the university's train station ("Dortmund Universität"). From there, S-Bahn line S1 runs every 20 or 30 minutes to Dortmund main station ("Dortmund Hauptbahnhof") and, in the other direction, via the "Düsseldorf Airport train station" to Düsseldorf main station.

You can also get from Dortmund city centre to the university by subway and bus. From Dortmund main station, take any subway bound for "Stadtgarten", usually lines U41, U45, U47 or U49. At "Stadtgarten", change to line U42 towards "Hombruch" and get off at "An der Palmweide". From the bus station there, buses to the university (lines 445 and 462) leave every ten minutes; the closest stop to building OH16 is "Joseph-von-Fraunhofer-Str.". For individual timetable information, see the website of the Verkehrsverbund Rhein-Ruhr, the regional public transport association (www.vrr.de).

Dortmund Airport (https://www.dortmund-airport.com) offers direct flights from several cities in Central Europe. The airport is approximately 20 kilometres from the university. A shuttle bus runs from the airport to Dortmund main station, from where you can continue by S-Bahn S1; usually, however, the fastest option is to take a taxi from the airport.

The Rhein-Ruhr-Airport in Düsseldorf (https://dus.com), about 60 kilometres from Dortmund, offers worldwide air connections. From there, S-Bahn line S1 towards Dortmund main station ("Dortmund Hauptbahnhof") takes you directly to the university's train station. It is usually faster, however, to take a Regional Express train, which makes fewer stops than the S-Bahn, to Dortmund main station and then change to the S1 towards Düsseldorf, getting off at the university's train station (the third stop).

Site plan & interactive map

The facilities of TU Dortmund University are located on two campuses, the larger North Campus and the smaller South Campus. In addition, some university facilities are located in the adjacent Dortmund Technology Park as well as in the city center and the wider urban area.
